TL;DR

Real-time video translation dubs live broadcasts on the fly with just 10-15 seconds of latency. In April 2025, Deepdub Live launched at NAB Show as the first enterprise solution for live dubbing with emotion preservation. In this article, we break down how streaming translation works, compare the top three services, and showcase real-world use cases for streams, webinars, and sports broadcasts.

What is Real-Time Video Translation and Why You Need It 🎯

Real-time video translation (or live dubbing) automatically translates live broadcasts as they air. While traditional dubbing takes 6-12 weeks, here the result appears in 10-15 seconds.

2025 Breakthrough

On April 3, 2025, at NAB Show, Deepdub unveiled Deepdub Live, the world's first enterprise solution for live dubbing with emotional transfer. This marked the beginning of mass adoption of the technology in sports broadcasts, streams, and corporate webinars.

Who is this for?

Streamers and YouTube Channels

- Reach international audiences without language barriers

- Translate live streams into 100+ languages simultaneously

- +300% reach while maintaining content quality

Webinar Organizers

- Multilingual conferences without hiring interpreters

- Save up to 90% on simultaneous interpretation services

- Scale to thousands of participants

Sports Broadcasting

- Translate commentary while preserving energy and emotions

- Real-world cases: MLS, NASCAR, Australian Open

- Monetize international markets

News Media

- Instant delivery of breaking news in 150+ languages

- 24/7 automated localization

- Broadcast-grade audio quality (48kHz)

How Real-Time Translation Technology Works 🔬

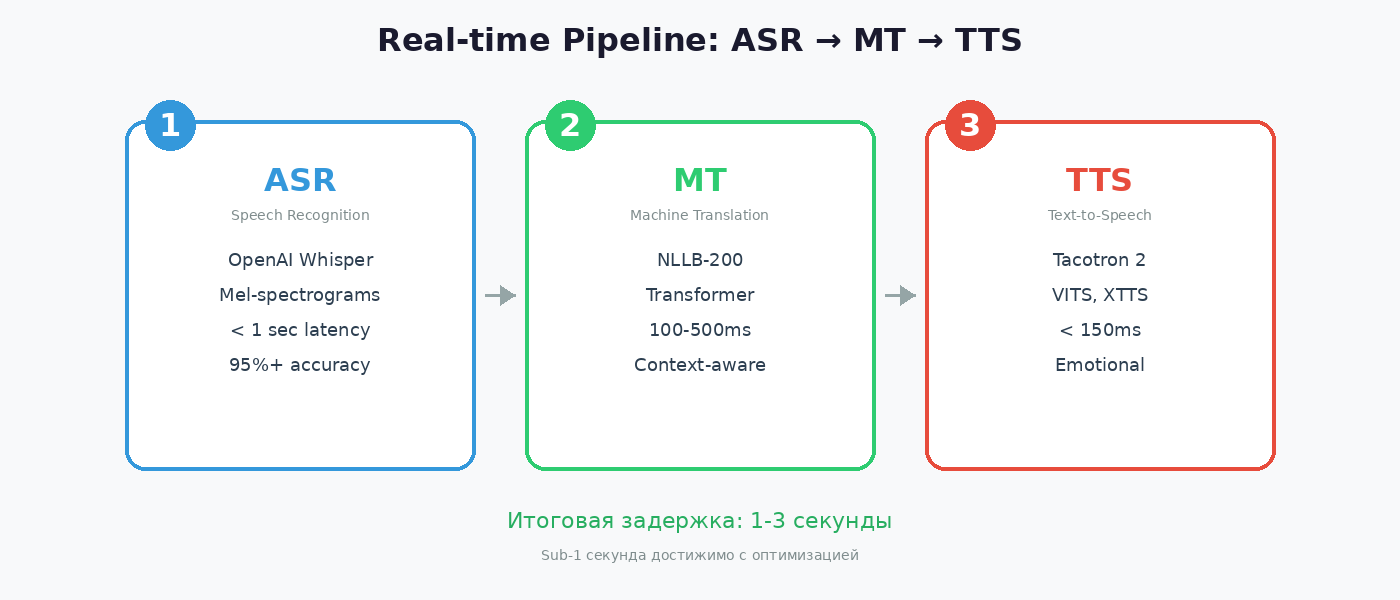

Real-time video translation runs on an ASR → MT → TTS pipeline: three AI stages chained together and tuned for minimal latency.
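As a minimal sketch of how the three stages chain together, the snippet below passes each audio chunk through ASR, then MT, then TTS. The names `run_pipeline`, `DubbedSegment`, and the `fake_*` stages are hypothetical placeholders for illustration, not the API of any service mentioned in this article.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class DubbedSegment:
    source_text: str      # ASR output
    translated_text: str  # MT output
    audio: bytes          # TTS output


def run_pipeline(
    audio_chunks: Iterable[bytes],
    asr: Callable[[bytes], str],
    mt: Callable[[str], str],
    tts: Callable[[str], bytes],
) -> Iterator[DubbedSegment]:
    """Push each chunk through ASR -> MT -> TTS and yield results as they are ready."""
    for chunk in audio_chunks:
        text = asr(chunk)
        if not text.strip():
            continue  # skip silence or empty recognition results
        translated = mt(text)
        yield DubbedSegment(text, translated, tts(translated))


# Placeholder stages (assumptions) so the sketch runs without any model installed.
def fake_asr(chunk: bytes) -> str:
    return chunk.decode("utf-8")


def fake_mt(text: str) -> str:
    return {"hello": "hola", "goal": "gol"}.get(text, text)


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


segments = list(run_pipeline([b"hello", b"", b"goal"], fake_asr, fake_mt, fake_tts))
```

Because the stages are passed in as plain callables, a real recognizer, translator, or synthesizer could be swapped in without changing the loop. A production system would also overlap the stages rather than run them strictly one after another.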

Complete Technical Pipeline

🎤 Stage 1: Automatic Speech Recognition (ASR)

The first stage converts the audio track into a text transcription.

- Technology: OpenAI Whisper Large V3 (industry standard)

- Speed: Less than 1 second for partial results

- Accuracy: 95%+ for clean English audio

- Processing: Audio is split into 30-second segments

Important: ASR quality is critical for the entire process, because recognition errors carry through into translation and synthesis. The system analyzes mel-spectrograms (time-frequency representations of the audio) and uses multi-GPU acceleration to minimize latency.
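The 30-second segmentation mentioned above can be sketched as a simple fixed-window split. This is an illustration only: the 16 kHz mono sample rate and the helper name `split_into_segments` are assumptions, and a real recognizer would do this internally.

```python
from typing import Iterator, Sequence

SAMPLE_RATE = 16_000   # assumption: 16 kHz mono input
SEGMENT_SECONDS = 30   # segment length stated above


def split_into_segments(
    samples: Sequence[float],
    sample_rate: int = SAMPLE_RATE,
    segment_seconds: int = SEGMENT_SECONDS,
) -> Iterator[Sequence[float]]:
    """Yield consecutive fixed-length windows; the last one may be shorter."""
    step = sample_rate * segment_seconds
    for start in range(0, len(samples), step):
        yield samples[start:start + step]


# 70 seconds of silence splits into windows of 30 s, 30 s, and 10 s.
audio = [0.0] * (70 * SAMPLE_RATE)
durations = [len(seg) / SAMPLE_RATE for seg in split_into_segments(audio)]
```

For live input, a system cannot wait for a full 30-second window before producing text, which is why partial results arrive much sooner than the window length.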

🌐 Stage 2: Machine Translation (MT)

Neural translation using Transformer architecture and attention mechanisms.

- Models: Meta NLLB-200 (200 languages), M2M-100, Gemini-based LLM

- Speed: 100-500 milliseconds per sentence

- Feature: Context-aware translation that considers the entire utterance

- Customization: Glossary support for technical terms
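One common way to implement glossary support is to protect each term with a placeholder before translation and restore the approved target term afterwards. The sketch below assumes that approach; `translate_with_glossary` and `toy_translate` are hypothetical names, and the article does not say how any specific vendor implements its glossaries.

```python
import re
from typing import Callable, Dict


def translate_with_glossary(
    text: str,
    glossary: Dict[str, str],
    translate: Callable[[str], str],
) -> str:
    """Swap glossary terms for placeholders, translate, then insert the fixed target terms."""
    placeholders: Dict[str, str] = {}
    protected = text
    for i, (source_term, target_term) in enumerate(glossary.items()):
        token = f"__TERM{i}__"
        pattern = re.compile(rf"\b{re.escape(source_term)}\b", re.IGNORECASE)
        protected, count = pattern.subn(token, protected)
        if count:
            placeholders[token] = target_term
    translated = translate(protected)
    for token, target_term in placeholders.items():
        translated = translated.replace(token, target_term)
    return translated


def toy_translate(text: str) -> str:
    """Stand-in for a real MT model: a tiny word-for-word lookup (assumption)."""
    words = {"confirmed": "confirmado"}
    return " ".join(words.get(w.lower(), w) for w in text.split())


result = translate_with_glossary(
    "Offside confirmed",
    {"offside": "fuera de juego"},
    toy_translate,
)
```

A real neural model may alter or drop placeholder tokens, so production systems typically need a more robust mechanism, such as constrained decoding or post-editing checks.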

🗣️ Stage 3: Text-to-Speech Synthesis (TTS)

Natural voice generation with emotional expression preservation.

- Technologies: Tacotron 2, VITS, Coqui XTTS v2, ElevenLabs models

- Speed: Less than 150ms for streaming responses

- Quality: Nearly indistinguishable from human voices

- Emotions: Dynamic adjustment of tone, pace, pitch, and intensity
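The per-stage speeds quoted for ASR, MT, and TTS add up to far less than the 10-15 seconds of end-to-end latency cited in this article. A rough budget using the upper bounds makes the gap visible. The article does not say where the remaining time goes; sentence buffering, audio alignment, and stream packaging are plausible candidates, but that is an assumption.

```python
# Upper bounds quoted for each stage in this article, in milliseconds.
STAGE_LATENCY_MS = {
    "asr_partial": 1000,  # "less than 1 second for partial results"
    "mt_sentence": 500,   # "100-500 milliseconds per sentence"
    "tts_stream": 150,    # "less than 150ms for streaming responses"
}
END_TO_END_MS = 15_000    # upper end of the 10-15 second range

model_ms = sum(STAGE_LATENCY_MS.values())
remaining_ms = END_TO_END_MS - model_ms

print(f"Model stages: {model_ms} ms, everything else: {remaining_ms} ms")
```

Read this way, model inference is a small share of the total delay, so most of the room for improvement would sit outside the three models.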

Breakthrough: Emotional TTS

Deepdub Live's eTTS™ technology captures high-energy commentary, dramatic pauses, and urgency. The system is trained on diverse emotional datasets and generates realistic intonations, stress patterns, and breathing pauses.

TOP-3 Real-Time Video Translation Services 🏆

In the 2025 market, several enterprise solutions for live dubbing stand out. Let's look at the top three leaders.

🥇 Deepdub Live — Technology Flagship

Launch: April 3, 2025 at NAB Show (Las Vegas)

Positioning: World's first AI solution for live dubbing with emotional transfer and enterprise scalability

Key Features:

- Latency: 15 seconds (target: 10 seconds)

- Languages: 100+ languages and dialects

- Audio Quality: 48kHz broadcast-grade audio

- Technology: Proprietary eTTS™ (Emotive Text-to-Speech)

- Integration: AWS Elemental MediaPackage, SRT, HLS, MPEG-DASH

Advantages:

- Dynamic real-time adjustment of tone, pace, and pitch

- Voice Cloning + Voice Bank with licensed voices

- Frame-accurate synchronization without noticeable delays

- TPN Gold Shield and SOC 2 compliant security

Pricing

- Enterprise model with custom pricing (contact the sales team)

- 14-day trial for businesses

Target Applications

- Sports commentary

- Esports (live shoutcasting)

- Breaking news

- YouTube streams

- Conferences and events

- Multilingual news channels

🥈 CAMB.AI — Sports Broadcasting Leader

Backing: Comcast NBCUniversal SportsTech 2025, Accelerate Ventures

Unique Feature: Only voice model on both AWS Bedrock AND Google Cloud Vertex AI

Technology:

- MARS5 model: Voice cloning from 2-3 sec audio

- BOLI translation: Advanced machine translation

- Languages: 150+ languages

Products:

- Studio: Automated dubbing

- Live Translation: For news and sports (launch at IBC 2025)

Historic Achievements:

- April 2, 2024: First AI-powered multilingual livestream of an MLS game

- 2025: Australian Open, NASCAR Mexico City Cup Race, Ligue 1

Pricing

- Lite: $14.99/month (5 minutes)

- Advanced: $150/month (30 minutes)

Advantage: CAMB.AI specializes in preserving the emotional energy of sports commentators, making it the ideal choice for sports broadcasts and esports.

🥉 AI-Media LEXI Voice — Professional Solution

Launch: NAB Show 2025 (US), IBC 2025 (Europe)

Key Partnership: AudioShake for real-time DME separation (Dialogue, Music, Effects)

Unique Technology:

- Isolates commentary from crowd noise, field sounds, music

- LEXI Voice generates accurate real-time subtitles

- Synthetic AI announcers create multilingual translation

- Translated voices are remixed over original background
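The final remix step can be sketched as a sample-by-sample mix of the dubbed voice over the separated background. This is a simplified illustration and not AI-Media's or AudioShake's actual implementation; the function name `remix`, the gain value, and the hard clipping are all assumptions.

```python
from typing import List, Sequence


def remix(
    background: Sequence[float],
    voice: Sequence[float],
    background_gain: float = 0.5,
) -> List[float]:
    """Mix a dubbed voice track over the attenuated background, sample by sample."""
    length = max(len(background), len(voice))
    mixed = []
    for i in range(length):
        b = background[i] * background_gain if i < len(background) else 0.0
        v = voice[i] if i < len(voice) else 0.0
        mixed.append(max(-1.0, min(1.0, b + v)))  # hard-clip to the valid [-1, 1] range
    return mixed


# Background continues after the voice ends; the second sample clips at 1.0.
mixed = remix(background=[0.25, 0.25, 0.25, 0.25], voice=[0.5, 0.95])
```

Lowering the background under the voice (often called ducking) keeps crowd noise and music audible without masking the translated commentary.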

Specifications:

- Languages: 100+ languages with ultra-low latency

- Customization: Customizable AI voices

- Cost Reduction: 90% vs traditional methods

Pricing

$30/hour + standard LEXI captioning rate

Case Study: Major US Broadcaster (2021)

Scale: 2,500+ hours captioned for a global sporting event

- 11,000+ athletes, 339 events

- Up to 50 simultaneous streams

- Enterprise-grade reliability

Practical Applications: Real-World Use Cases 📊

1. YouTube and Twitch Streams

Real-time translation gives streamers access to international audiences without language barriers.

Solutions for Streamers

- LocalVocal (OBS plugin): Free, fully local, 100+ languages

- Maestra AI: $39-159/month, integration with OBS/vMix/Twitch

- Lingo Echo (Twitch): €6.95/month, 25+ languages, interactive games

Results

- +300% audience reach

- Growth in subscriptions and donations

- Cross-cultural communities

- Monetization of international markets

2. Corporate Webinars and Conferences

Multilingual events without hiring simultaneous interpreters have become reality thanks to AI.

Measured Impact (Interprefy Translation Study, 2025)

- Prioritize translation accuracy

- Report increased participant engagement

- Report growth in multilingual participation

TOP Solutions for Webinars:

- Wordly AI: 3,000+ language pairs, integration with Zoom/Teams/Cvent, education discounts

- KUDO: 200+ languages, hybrid AI + professional interpreters, native Teams integration

- JotMe: #1 rated 2025, 77+ languages, no bots, from $9/month

Cost Savings: $172 per meeting per language compared to third-party interpreter services (Metrigy study). For a 3-language conference, savings amount to $516 per session.
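The savings arithmetic above can be reproduced with a small helper. The $172 figure comes from the Metrigy study quoted in this article; the function name `session_savings` and the 12-session example are hypothetical.

```python
SAVINGS_PER_MEETING_PER_LANGUAGE_USD = 172  # Metrigy figure quoted above


def session_savings(languages: int, sessions: int = 1) -> int:
    """Estimated savings in USD versus third-party interpreter services."""
    return SAVINGS_PER_MEETING_PER_LANGUAGE_USD * languages * sessions


three_language_session = session_savings(languages=3)       # 516
monthly_series = session_savings(languages=3, sessions=12)  # hypothetical: 12 sessions
```

The estimate scales linearly with both languages and sessions, so it ignores any volume discounts or per-platform subscription fees.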

3. Sports Broadcasting

Revolution in sports broadcasting: commentary in viewers' native languages while preserving the energy of the original.

Historic Case: CAMB.AI × MLS

April 2, 2024, Generation Adidas Cup

First AI-powered multilingual live sports broadcast in history

- Languages: English (original) + French, Spanish, Portuguese (real-time)

- Technology: Emotion-rich voice cloning, preserving commentator's passion

- Impact: "Groundbreaking moment" making sports "truly borderless"

Other 2025 Deployments:

- Australian Open: Post-match press conferences (viral Djokovic moment in Spanish)

- NASCAR Mexico City: Cup Race translation to Spanish

- Ligue 1: French football top division (CAMB.AI)

4. News Media

Instant delivery of breaking news in 150+ languages without translation delays.

- CAMB.AI: News product launch in October 2025

- AI-Media: Broadcast-grade solutions for leading global broadcasters

- Samsung Live Translate: Real-time translation built into TVs (announced at CES 2025)

Philosophy: "Making news accessible to everyone... AI as a positive force for trusted journalism" — Akshat Prakash, CTO CAMB.AI

Real-Time Translation Technology Limitations ⚠️

Despite impressive progress, the technology has objective limitations.

1. Technical Challenges

- Simultaneous Speakers: Most systems struggle with overlapping speech. Maestra AI and ElevenLabs detect speakers automatically, but overlapping speech remains challenging.

- Background Noise: Clean audio is critically important. Recommendations: headset, noise-canceling microphones, direct audio connection. Solution: AudioShake DME isolates dialogue from crowd noise.

- Accents and Dialects: Non-native accents reduce accuracy. Most models also work better on English due to training data bias; expect an extra 300-500ms of latency for other languages.

- Context: Real-time systems have a limited context window (a few hundred milliseconds), which leads to interpretation errors as well as punctuation and grammar issues.

2. Quality Limitations

- Emotional Depth: AI still falls short of human actors in spontaneous creativity and adaptability

- Cultural Adaptation: Idioms and cultural references require custom glossaries (up to 3,000 phrases in Wordly)

- Voice Naturalness: Non-English voices sometimes sound robotic (though improving)

- Lip-sync: Limited in real-time due to 200-400ms processing delay

2025 Solution: Hybrid Approach

Combining AI (bulk translation automation) with human review (cultural nuance, emotional fine-tuning, QA) reduces workload by 80% while maintaining quality. Examples: KUDO, Interprefy, Papercup.

What About Recorded Content? 🎬

Real-time translation is ideal for live broadcasts, but for pre-recorded content (VOD), more advanced solutions exist without latency constraints.

Speeek.io — Professional AI Dubbing for Video

For YouTube channels, online courses, corporate presentations, and marketing videos, we offer a complete solution with perfect voice quality and lip synchronization.

Why Speeek.io for VOD Content?

- No Limitations: Videos of any length

- Perfect Lip-Sync: Professional synchronization

- Premium Quality: 94-97% translation accuracy

- 20+ Languages: English, Spanish, German...

- AI Editor: Full control over results

- From $0.40/min: 10x cheaper

Real-time (Live)

For live broadcasts

- 10-15 seconds latency

- Automatic process

- Limited quality control

- Basic lip-sync

Use Cases: Streams, webinars, sports, news

VOD Dubbing (Speeek.io)

For recorded videos

- Processing: 2-5 minutes per 10-minute video

- Full control via AI editor

- Premium voice quality

- Professional lip-sync

Use Cases: YouTube, courses, presentations, advertising

Conclusion

Real-time video translation in 2025 has reached production-ready status. With the launch of Deepdub Live in April, the technology became available for enterprise applications in sports broadcasting, news media, and corporate events.

Key Takeaways:

- Technology has matured: Latency decreased from 3-5 seconds (2023) to sub-1 second for some applications, while full live dubbing runs at 10-15 seconds

- Mass adoption: From Microsoft Edge to Samsung TV, built-in translation is becoming standard

- Economics have changed: 90%+ cost reduction vs traditional methods

- Real-time for live, dubbing for VOD: Each approach has its niche

For live broadcasts, choose enterprise solutions (Deepdub Live, CAMB.AI, AI-Media LEXI Voice). For pre-recorded content, choose professional platforms like Speeek.io with perfect quality and full control.